Apple’s iOS 18 Launches With Built-In Eye Tracking for AAC Users

The wait is finally over: iOS 18 is available now. This major update to the iPhone and iPad operating systems introduces something very exciting: built-in eye tracking controls. The new feature could transform the accessibility of mobile apps and be a game changer for augmentative and alternative communication (AAC) users in particular.

What is Eye Tracking and How Does It Work?

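Eye gaze controls let users navigate their devices just by moving their eyes. The feature uses the front-facing (selfie) camera that already comes standard on iPhones and iPads, so no extra hardware is needed. After a quick calibration, you look at an item to highlight it and hold your gaze on it to select it.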

How to Prepare Your Device for Eye Tracking

iOS 18 became available on September 16, 2024. Unfortunately, not every device that can install iOS 18 supports the new feature. Don't expect to find the eye tracking option on anything older than an iPhone 12 or an iPad (10th generation). These older devices likely can't track your eyes accurately enough, which is presumably why Apple has disabled the feature on them.

Before you download iOS 18, there are a few things you might want to do to prepare:

- Free up space by deleting unused apps. iOS updates usually require several gigabytes of free space, so make sure you have room ahead of time. Go to Settings > General > iPhone Storage (or iPad Storage) to find the apps taking up the most space.

- Consider backing up your device with iCloud. Although it's rare, files can become corrupted during major operating system updates.

As with previous iOS updates, upgrading to iOS 18 is simple. If you have Automatic Updates turned on and your device is connected to Wi-Fi and charging, you should receive a prompt asking if you want to install the update overnight. If you don't want to wait, you can go to Settings > General > Software Update to start the update manually.

Once the operating system is updated, enabling eye tracking isn’t much more difficult.

How to Activate the New Eye Tracking Feature

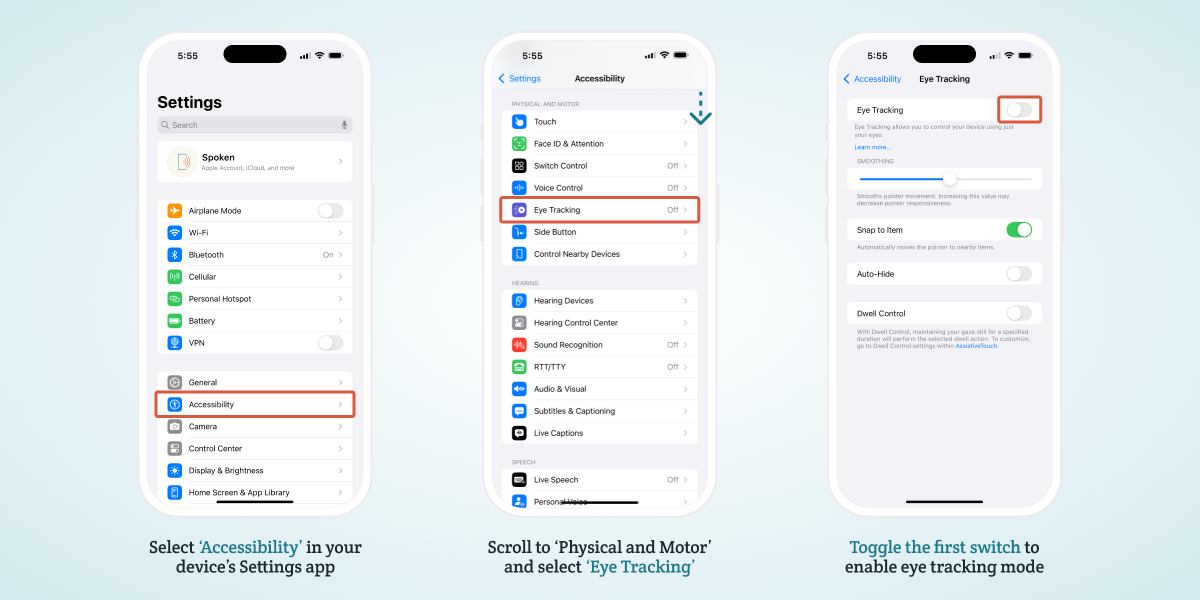

Once your compatible device is running iOS 18 or iPadOS 18 (or later), you can activate eye tracking from the Settings app.

You can find the toggle under Settings > Accessibility > Eye Tracking.

For step-by-step instructions on how to enable and use iOS eye tracking, check out our previous blog post: How To Control AAC With Eye Tracking (iOS 18 Tutorial)

Why iOS 18 Is Big For AAC Users

While eye tracking on iOS will undoubtedly have a massive effect on accessibility as a whole, we're especially excited about the impact it could have on augmentative and alternative communication (AAC) users. Communication challenges often coincide with physical disabilities or motor impairments that make it difficult or impossible to use a touch screen. Cerebral palsy and ALS are just a couple of examples of conditions that can affect both motor skills and speech.

People who need AAC to communicate but cannot use a touch screen have traditionally resorted to costly, dedicated AAC devices with added eye tracking hardware. With the introduction of built-in eye gaze controls on iOS, it should now be possible for them to explore more affordable options in the form of mobile apps like Spoken.

Of course, you don't need a motor impairment to use eye tracking. Now that it's built into common consumer devices, the barrier to trying eye gaze controls is much lower, and some users may find it easier or more efficient than the touch screen. That potential efficiency matters for AAC users, because communicating with AAC is generally slower than speech. Any added speed helps, so eye tracking might be worth trying for that reason alone.

Using Eye Tracking with Spoken

Apple's eye tracking has a small learning curve: it's easy to figure out, but its accuracy issues can make it frustrating to use. We recommend experimenting with the dwell time and sensitivity settings to find what works best for you.

During testing, we noticed that eye tracking works better in some apps than in others. We've worked hard to minimize these issues in Spoken, and our eye tracking optimizations are available in Spoken 1.8.9.

Eye Tracking Compatibility Improvements In Spoken 1.8.9:

- Expanded Button Target Areas: While buttons appear the same visually, we’ve increased some of their invisible target areas to make them easier to select using eye tracking.

- Fixed Word-Icon Highlighting: Previously, Apple’s eye tracking would highlight words and their accompanying icons separately, treating them as two buttons. Now, both are highlighted together as a single button.

- New Scroll Options: We've added up and down arrows for scrolling, since Apple's AssistiveTouch scrolling feature doesn't work correctly with Spoken. We think some touch screen users will appreciate this addition as well. You can also scroll through one full page of predictions by looking at the bottom of the screen, below the navigation bar. A blue line appears to indicate that you've activated this otherwise hidden feature.

In the future, we plan to add a dedicated eye-tracking mode to improve the experience even further. Until then, enabling Large Print Mode in Spoken can make it easier to navigate and select words.

Real-World Tips for Using Apple’s Eye Tracking

While beta testing eye tracking, we discovered several ways to improve its performance. We believe that following these tips will help users get the most out of the new accessibility option.

- Put your device in portrait mode. It seems to work better in this orientation because the camera is centered on your face.

- Use a stand to hold your device in place and avoid repositioning in your seat. Movement will cause the device to quickly lose calibration.

- Keep your device about 1.5 feet (roughly 45 cm) from your face.

- Make sure you're in a well-lit room, but avoid sitting with a light source behind you, because it will cast your face in shadow.

- Try recalibrating if the eye tracking ever starts to feel off. There is an invisible shortcut at the top left of every page that will take you directly to the calibration screen if you look at it.

- Don’t worry too much about accuracy when typing — with ‘Zoom on Keyboard Keys’ enabled, selecting a key simply zooms in on that area without typing the letter, allowing you to adjust or confirm your choice before it’s entered.

- Avoid wearing glasses if possible. Unfortunately, glasses seem to reduce the accuracy of Apple's eye tracking, likely because the lenses reflect light from the screen.

Download iOS 18 Now

The time has come to upgrade to iOS 18! Once you've updated, give the new eye tracking feature a try with Spoken. We'd love to hear how the new iOS update works alongside our app. You can reach out to us by email or through any of the social media sites listed in the website's footer.

If you haven’t already, you can download Spoken - Tap to Talk AAC here.

About Spoken

Spoken is an app that helps people with aphasia, nonverbal autism, and other speech and language disorders.