How To Control AAC With Eye Tracking (iOS 18 Tutorial)

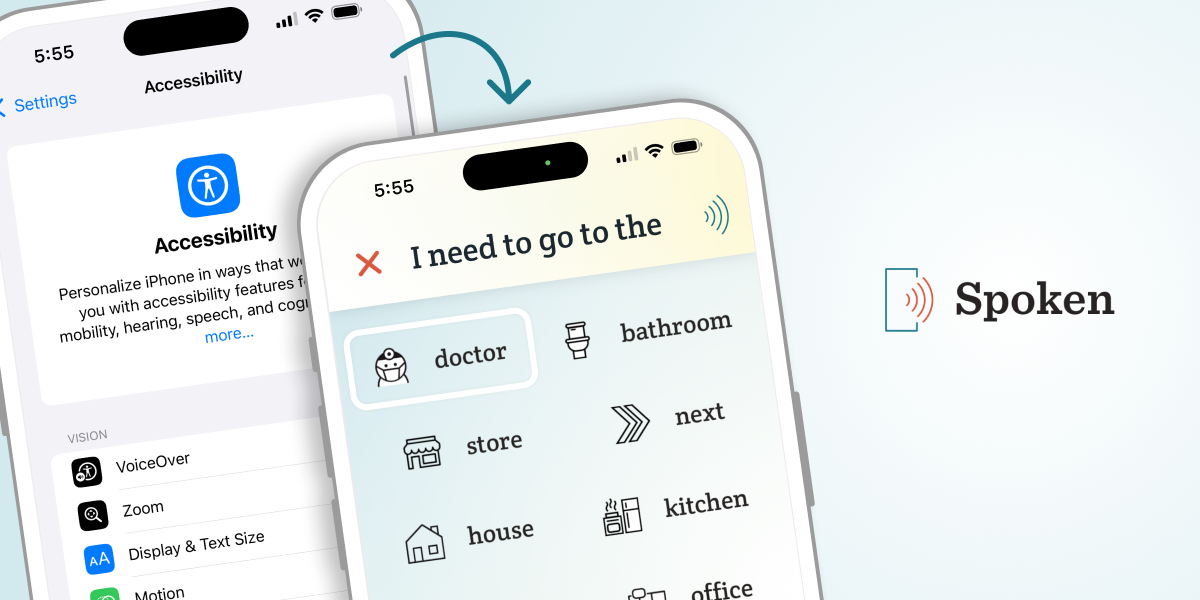

Apple just released iOS 18, which brings an exciting new feature to certain iPhones and iPads: eye tracking controls. At Spoken, we’ve been beta testing this feature over the past couple of months to make sure it works seamlessly with our app. We believe it will make Spoken and other AAC apps accessible to entirely new groups of users, and we’re very excited!

What is Eye Tracking?

Eye tracking is a hands-free way of controlling your device. Using the front-facing camera, the device can detect what you’re looking at on the screen. This allows you to navigate and interact with your device without the need for touch or voice commands.

Benefits of Eye Tracking in AAC Apps

The inclusion of eye tracking in iOS 18 could be significant for augmentative and alternative communication (AAC) users: people who have trouble speaking and rely on their devices to communicate. This new form of navigation will make AAC more accessible to people with physical disabilities or motor impairments that make touch controls difficult or impossible to use, including people with conditions like ALS or cerebral palsy who may not be able to use their hands. With eye tracking, they can navigate and control AAC apps like Spoken just by moving their eyes.

While eye gaze controls were already available on certain dedicated AAC devices, they weren’t widely available for apps until now. This is great news because AAC apps on consumer hardware like iPads tend to be much more affordable, and you can use the same device for other things like texting or browsing the web. For these reasons, widely available eye tracking for AAC iPad apps could be a game changer.

Existing AAC users may also benefit from the addition of eye tracking, even if they don’t have any physical disabilities. Some may find eye gaze faster or more efficient than touch controls. It can be rather intuitive once you get used to it.

How To Enable Eye Tracking on Your iPhone or iPad

If you haven’t updated to iOS 18 yet, open the Settings app and go to General > Software Update. Once the update is installed, you can enable eye tracking on compatible devices. Unfortunately, eye tracking isn’t part of the iOS 18 update on every device: don’t expect to find it on anything older than an iPhone 12 or a 10th-generation iPad.

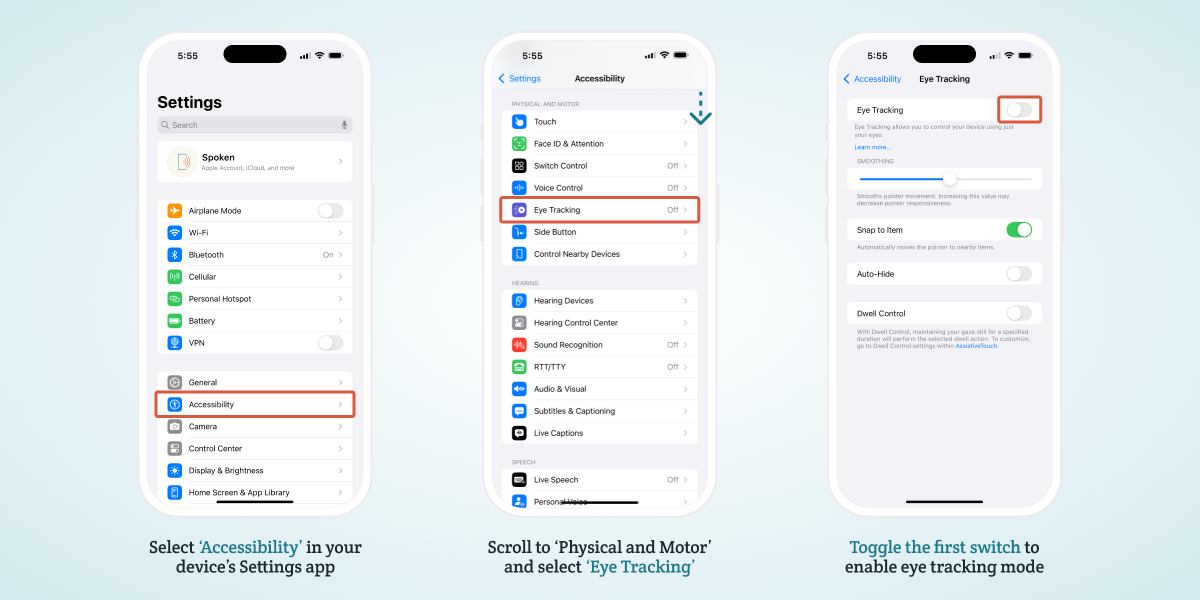

Once you have iOS 18 (or iPadOS 18) running on a device that supports eye tracking, you can enable the feature by navigating to your device’s settings.

Step-by-Step Guide to Enabling the New iOS Eye Tracking Feature

- Ensure you’re running the correct version of iOS or iPadOS (18 or above).

- Open the Settings app.

- Tap ‘Accessibility’.

- Scroll to ‘Eye Tracking’ (under the ‘Physical and Motor’ heading).

- Tap the first toggle to turn on the new feature.

- Follow the on-screen directions for calibration. You’ll be instructed to follow a circle around the screen with your eyes. For best results, place your device on a stable surface around 1.5 feet from your face.

- You’ll see a checkmark appear on-screen when you’re done — eye tracking will now be enabled and ready to use.

How To Use Eye Tracking on iOS

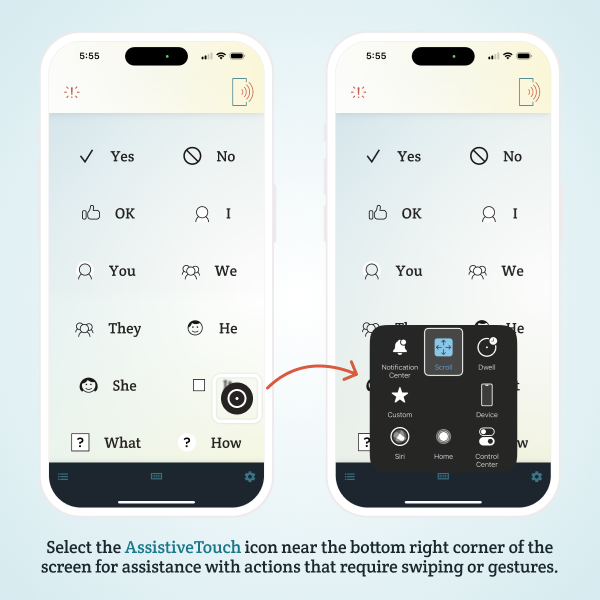

Using eye tracking to control your phone or tablet is simple. Once the feature is enabled, an invisible cursor follows your eye movements. When you look at an interactive element on the screen, it is highlighted with a white outline. If you keep your gaze on that element, it will be selected (“clicked”) and the chosen action will be performed.

The ability to select or click objects by fixing your gaze on them is called “dwell control.” In the Settings app, you can adjust how long you need to maintain focus (dwell) on an object before it will be selected.
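At its core, dwell control is a timer that restarts whenever your gaze moves to a different element. The short Python sketch below illustrates that idea only; it is not Apple’s implementation. The sample format (a timestamp in seconds paired with the ID of the element under your gaze), the function name `dwell_selections`, and the one-second default are hypothetical choices made for illustration.

```python
from typing import Iterable, List, Optional, Tuple

# Hypothetical gaze sample: (time in seconds, ID of the element under the gaze, or None)
GazeSample = Tuple[float, Optional[str]]


def dwell_selections(samples: Iterable[GazeSample], dwell_time: float = 1.0) -> List[str]:
    """Return the elements 'clicked' by keeping the gaze on them for dwell_time seconds.

    Illustrative sketch only; the sample format and default are assumptions.
    """
    selected: List[str] = []
    current: Optional[str] = None  # element currently highlighted (if any)
    start = 0.0                    # time the gaze arrived on `current`
    fired = False                  # whether `current` has already been selected

    for t, target in samples:
        if target != current:
            # Gaze moved to another element (or off all elements): restart the timer.
            current, start, fired = target, t, False
        elif target is not None and not fired and t - start >= dwell_time:
            # Gaze stayed on the same element long enough: select it exactly once.
            selected.append(target)
            fired = True

    return selected


samples = [(0.0, "Hello"), (0.5, "Hello"), (1.0, "Hello"),
           (1.25, None), (1.5, "Yes"), (2.0, "Yes")]
print(dwell_selections(samples))  # ['Hello']
```

Raising `dwell_time` in this sketch mirrors lengthening the dwell duration in Settings: fewer accidental selections, at the cost of slower input. The `fired` flag keeps a long stare from selecting the same button over and over; you have to look away and back to select it again.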

Eye tracking pairs well with Apple’s existing AssistiveTouch feature, which is automatically enabled when you turn on eye tracking. AssistiveTouch places a circular button in the bottom-right corner of the screen; selecting it opens a menu of shortcuts to actions that would usually require swiping or other gestures, including scrolling options.

Does Apple Eye Tracking Work?

Apple’s eye tracking controls aren’t the most precise, but you can improve their performance by taking some simple steps. To get the most out of the feature, here’s what we suggest:

- Put your device in portrait mode. The vertical orientation seems to work best because the camera is centered on your face.

- Stay as still as possible; if you shift in your seat or move your device, you’ll probably need to recalibrate. We strongly suggest using a stand for this reason.

- Keep your face about 1.5 feet from the screen, which is the distance Apple recommends.

- If tracking feels off, try recalibrating first. You can jump straight to the calibration screen by moving the cursor to the top-left corner of the screen, which is a configurable “hot corner” shortcut.

- If you wear glasses, try removing them as long as you can still read the screen without them. Eye tracking does work with glasses on, but accuracy may suffer, likely because the screen reflects off the lenses.

- Make sure you’re in a well-lit room. We quickly discovered that our dimly lit office space isn’t ideal for using this feature.

- Avoid backlighting — sitting in front of a light source like a window will cloak your face in shadow and make it hard for the camera to see your eyes. Conversely, too much light might wash out your face.

Can I Use Eye Tracking on Android Devices?

Unfortunately, eye tracking is not yet a built-in accessibility feature on Android, and it’s unlikely to be added anytime soon. The accuracy of eye tracking depends on the camera hardware, which varies greatly between Android devices.

About Spoken

Spoken is an app that helps people with aphasia, nonverbal autism, and other speech and language disorders.